Proving Reality: Why AI Content Needs Digital Signatures

The Six-Fingered Thief

It began with a photo. A man in handcuffs, staring into a security camera. But when investigators zoomed in, they saw something impossible: six fingers. Perfectly shaped, perfectly lit, perfectly fake.

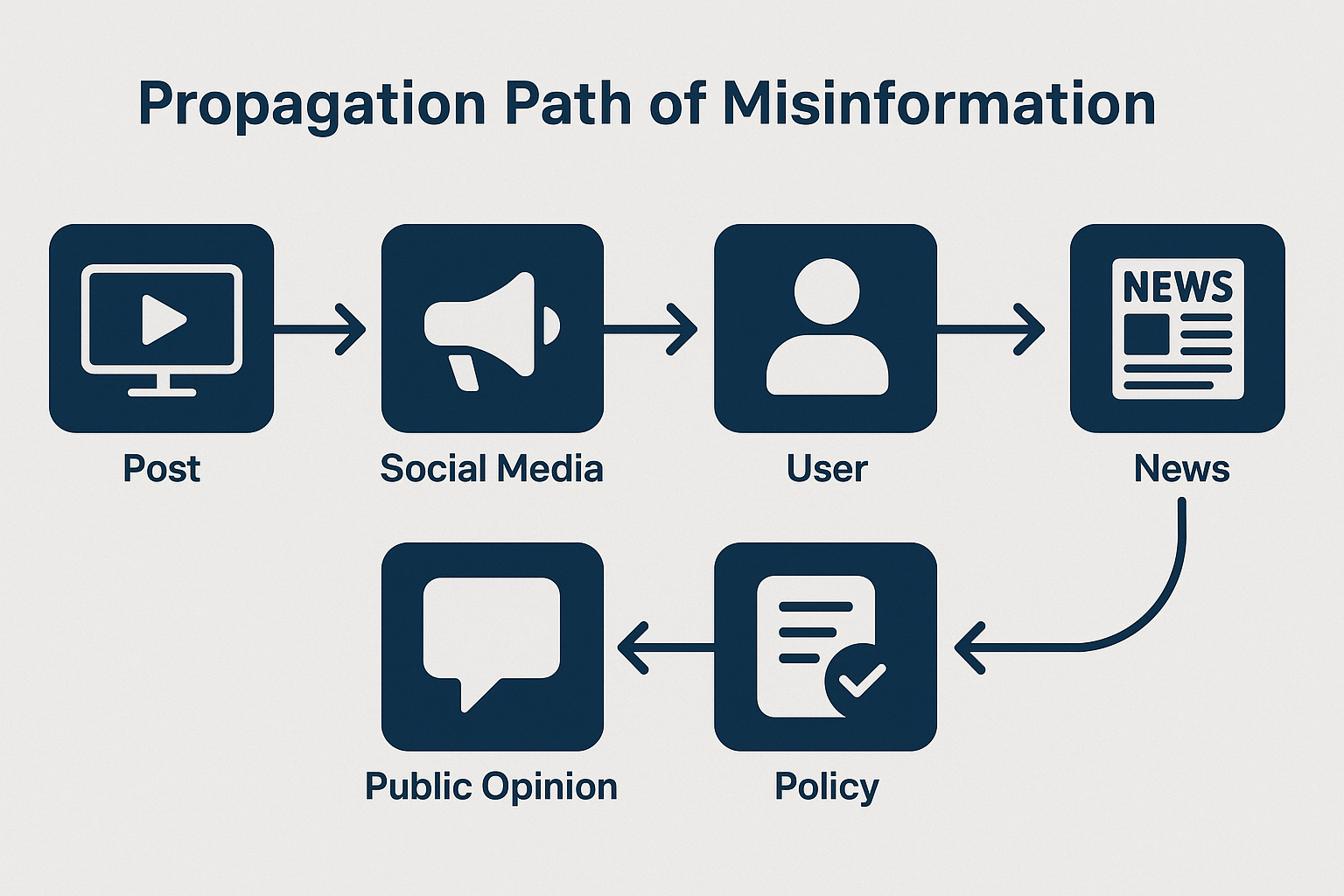

The “six-fingered thief” never existed. Yet his image spread across social media, shared more than two million times in a single day. Outrage grew, politicians commented, and a local company’s stock price even dipped briefly after the company was falsely linked to him.

That was the moment we realized something profound: in the age of generative AI, seeing is no longer believing. Reality itself now needs a way to prove it is real. When anything can be synthesized, trust becomes a currency.

The New Crime Scene: AI and Law Enforcement

AI-generated evidence is already challenging courts and investigators. Prosecutors struggle to verify whether footage or images are genuine, while defense lawyers have begun arguing that “it could be fake” even when the content is real.

Europol warns of rapidly increasing AI-enabled fraud and synthetic identity crime. This problem does not just complicate trials. It undermines accountability itself.

https://www.europol.europa.eu/media-press/newsroom/news/dna-of-organised-crime-changing-and-so-threat-to-europe

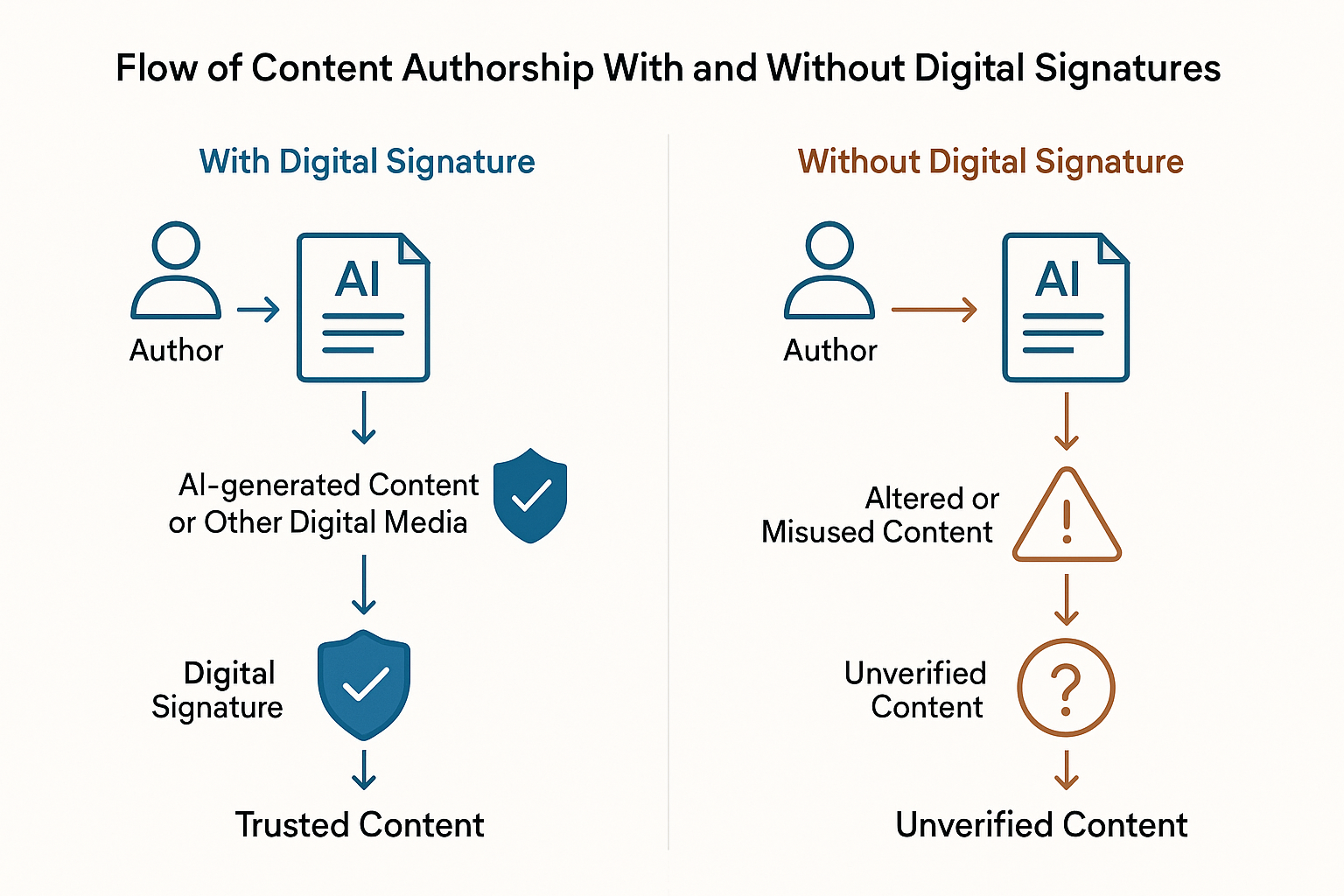

Digital signatures are cryptographic proofs that confirm who created a file and that it has not been altered since. Without them, truth becomes negotiable.

Deepfakes and the Collapse of Context

Many deepfakes start as entertainment before they are weaponized. From celebrity fabrications to fake war footage, the real danger is not just manipulation; it is momentum.

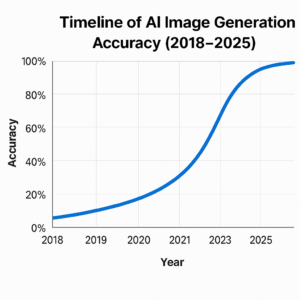

Modern diffusion models can now generate increasingly realistic high-resolution video, and generation times keep falling. As generation gets faster, misinformation spreads faster than verification can keep up. Once context collapses, trust follows.

Estimated $78B annual economic cost of deepfake-driven fraud and misinformation

The Economic and Social Ripple Effect

Fake content does not just distort perception; it costs real money. IBM’s CyberTrust Index estimates that deepfake fraud and AI-driven scams could reach $25 billion in global losses this year.

Social platforms face mounting moderation costs and growing distrust from users and advertisers. Brands hesitate to appear alongside content they cannot authenticate. This is more than a technology issue; it is a confidence crisis.

Finance worker pays out $25 million after video call with deepfake ‘chief financial officer’

The Plagiarism Problem and the Paradox of Creation

Generative AI learns by analyzing vast datasets of human creativity. Every output draws on patterns learned from millions of original works, yet the people behind those works are rarely acknowledged.

We have entered an era where machines can create everything but prove nothing.

Digital signatures can change that. They make authorship traceable, which makes fair royalties possible and originality visible again.

The Case for Proof: Building Verifiable Reality

Imagine a world where every piece of media carries a small digital proof: a signature that verifies who created it, when, and how it has been altered.

This is already happening. Efforts such as the C2PA standard, Adobe’s Content Credentials, and Intel’s Trust Authority are embedding provenance data directly into creative tools and compute infrastructure. The aim is not to limit AI creativity; it is to anchor it to truth. With digital signatures, the web regains something fundamental: trust that can be verified, not assumed.
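What does “a signature that verifies who created it, when, and how it has been altered” look like in practice? The Python sketch below is a hypothetical illustration, not the C2PA format or any vendor’s API. It binds a SHA-256 hash of the content to a small manifest recording the creator, a timestamp, and a list of edits, then signs that manifest. To stay within Python’s standard library it uses a Lamport one-time signature, a simple hash-based scheme where each key pair may sign only one message. Production provenance systems typically rely on standard schemes such as ECDSA or Ed25519, with certificates that identify the signer. The field names (`creator`, `created`, `edits`, `content_sha256`), the helper functions, and the sample values are all assumptions made for illustration.

```python
import hashlib
import json
import secrets

# Lamport one-time signature over SHA-256, standard library only.
# Each key pair must sign at most ONE message; reuse leaks secret values.

def keygen():
    """Return (secret_key, public_key): 256 pairs of random 32-byte secrets and their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    pk = [(hashlib.sha256(a).digest(), hashlib.sha256(b).digest()) for a, b in sk]
    return sk, pk

def _bits(message: bytes):
    """Yield the 256 bits of SHA-256(message), most significant bit first."""
    for byte in hashlib.sha256(message).digest():
        for shift in range(7, -1, -1):
            yield (byte >> shift) & 1

def sign(sk, message: bytes):
    """Reveal one secret from each pair, chosen by the corresponding digest bit."""
    return [sk[i][bit] for i, bit in enumerate(_bits(message))]

def verify(pk, message: bytes, signature) -> bool:
    """Each revealed secret must hash to the public value selected by the digest bit."""
    if len(signature) != 256:
        return False
    return all(
        hashlib.sha256(revealed).digest() == pk[i][bit]
        for i, (bit, revealed) in enumerate(zip(_bits(message), signature))
    )

# Hypothetical provenance manifest: who, when, what was done, plus a content hash.
def make_manifest(content: bytes, creator: str, created: str, edits):
    return {
        "creator": creator,
        "created": created,
        "edits": list(edits),
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }

def canonical(manifest) -> bytes:
    """Deterministic JSON so the signer and the verifier hash identical bytes."""
    return json.dumps(manifest, sort_keys=True, separators=(",", ":")).encode("utf-8")

def check(pk, content: bytes, manifest, signature) -> bool:
    """Authentic only if the content matches the manifest hash AND the manifest signature verifies."""
    if hashlib.sha256(content).hexdigest() != manifest["content_sha256"]:
        return False
    return verify(pk, canonical(manifest), signature)

sk, pk = keygen()
photo = b"placeholder image bytes"  # stand-in for real image data
# "camera-serial-0042" and the timestamp are hypothetical sample values.
manifest = make_manifest(photo, "camera-serial-0042", "2024-05-01T09:30:00Z", ["captured", "cropped"])
signature = sign(sk, canonical(manifest))

print(check(pk, photo, manifest, signature))            # True
print(check(pk, photo + b"\x00", manifest, signature))  # False: content changed
forged = dict(manifest, creator="someone-else")
print(check(pk, photo, forged, signature))              # False: manifest changed
```

Any change to the content breaks the hash comparison, and any change to the manifest breaks the signature, which is what lets a viewer tell an untouched original from an altered copy. A signature only proves which key signed; tying keys to trustworthy cameras, tools, or publishers is the job of the surrounding certificate infrastructure.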

Conclusion: Proof in a Post-Truth World

The six-fingered thief was fiction, but his lesson is real. He showed us that the future of AI is not about creating new realities; it is about protecting the one we already have.

In a time when illusion can be automated, truth deserves its own infrastructure. Digital signatures might be the key that restores faith in what we see, hear, and read.