Open Ethernet vs. InfiniBand for AI Fabrics: What’s Different, Why Ethernet Is Surging, and Where Both Still Hurt

AI clusters live or die by their network. When you stitch together thousands of GPUs, the fabric becomes a first-class accelerator: it must deliver brutal bandwidth, ultra-low tail latency, clean congestion control, and predictable job completion times. For years, InfiniBand (IB) owned that story. Today, “Open Ethernet” fabrics—multi-vendor Ethernet with open software (e.g., SONiC) and open standards (e.g., Ultra Ethernet)—are catching up fast and, in many environments, winning.

Below I unpack what each approach really is, why so many organizations are moving to Open Ethernet for AI, and the practical challenges you’ll face either way.

Quick definitions

- InfiniBand (IB)

A purpose-built, lossless HPC/AI interconnect with native RDMA, mature congestion control, and collective offloads. In practice, the modern IB ecosystem is led by NVIDIA (Quantum/Quantum-2/Quantum-X switches, ConnectX/BlueField NICs, SHARP for collectives, GPUDirect RDMA).

- Open Ethernet

Ethernet fabrics designed for AI using open standards and a multi-vendor stack: merchant-silicon switches (Broadcom/Marvell), open or disaggregated NOS (e.g., SONiC), and open initiatives like the Ultra Ethernet Consortium (UEC). Data movement uses RoCEv2 today and will increasingly adopt UEC’s Ultra Ethernet Transport (UET) tomorrow.
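To make "data movement uses RoCEv2" concrete: RoCEv2 carries the InfiniBand transport headers inside UDP/IP (UDP destination port 4791), so every packet pays Ethernet, IP, and UDP overhead on top of IB's own headers. The sketch below estimates the resulting wire efficiency. It is a simplification, assuming IPv4 without options, a bare Base Transport Header (no extended headers such as RETH), and an optional 802.1Q tag; the function and constant names are hypothetical.

```python
"""Rough RoCEv2 wire-efficiency estimate (simplified, illustrative only)."""

ROCEV2_UDP_DST_PORT = 4791  # IANA-assigned UDP destination port for RoCEv2

# Per-packet overhead in bytes. Assumption: IPv4 without options and a bare
# InfiniBand Base Transport Header (no RETH/AETH extended headers).
OVERHEAD_BYTES = {
    "preamble_and_sfd": 8,
    "ethernet_header": 14,
    "ipv4_header": 20,
    "udp_header": 8,
    "ib_base_transport_header": 12,
    "icrc": 4,              # invariant CRC covering the IB transport portion
    "ethernet_fcs": 4,
    "inter_frame_gap": 12,  # idle time on the wire, counted as overhead
}
VLAN_TAG_BYTES = 4  # optional 802.1Q tag


def wire_bytes(payload: int, vlan: bool = True) -> int:
    """Bytes of link time one RoCEv2 packet occupies, including framing."""
    overhead = sum(OVERHEAD_BYTES.values())
    if vlan:
        overhead += VLAN_TAG_BYTES
    return payload + overhead


def efficiency(payload: int, vlan: bool = True) -> float:
    """Fraction of line rate that carries RDMA payload."""
    return payload / wire_bytes(payload, vlan)


if __name__ == "__main__":
    for mtu in (1024, 2048, 4096):
        print(f"RDMA MTU {mtu:>4} B: {efficiency(mtu):.2%} of line rate")
```

Under these assumptions a 4096-byte payload loses about 2% of the link to headers and framing, while smaller MTUs pay proportionally more; real efficiency also depends on message sizes and which extended headers are present.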

What the latest data says

Independent analysts now report Ethernet gaining the upper hand in AI back-end networks, driven by rapid improvements in lossless Ethernet and the gravitational pull of an open ecosystem. Hyperscalers (and a growing cohort of GPU cloud providers) are scaling enormous AI clusters on RoCEv2; industry groups are hardening Ethernet for AI with new transports and congestion control.

Meta publicly detailed choosing RoCEv2 for the majority of its AI capacity, and vendors like Dell, Cisco, and others are making lossless Ethernet simpler to deploy and operate at scale (e.g., “roce enable” in Enterprise SONiC profiles).

The UEC 1.0 specification introduces UET, a new RDMA-class transport for Ethernet that targets AI/HPC tail-latency and scalability pain points, explicitly aiming to exceed today’s specialized interconnects over time.

On performance, field tests and customer studies increasingly show small or statistically insignificant gaps between IB and tuned RoCEv2 Ethernet for many generative and inference workloads, which is important context for cost and supply-chain decisions.

Why organizations are adopting Open Ethernet for AI

- Ecosystem freedom & supply chain resilience

Multi-vendor optics, switches, and NICs reduce single-vendor exposure and ease procurement at AI scale. Open NOS options (SONiC) and standard optics SKUs align with mainstream DC ops.

- Operational familiarity & tooling

Ethernet leverages existing DC skills, monitoring, and automation. SONiC, EVPN, standard telemetry, and familiar operational patterns lower time-to-competence for NetOps/SRE teams.

- Convergence with the rest of the data center

You can run the AI back-end and east-west “general” traffic under the same operational model, easing integration with storage, service meshes, and Kubernetes-based MLOps. (Many choose a logically separate AI pod but share operational tools across it.)

- Standards momentum (UEC)

The Ultra Ethernet Consortium aligns silicon, NIC, switch, and OS vendors on AI-specific improvements (better tail latency, scale, collectives). This reduces fragmentation and accelerates feature delivery on Ethernet.

- Comparable performance for many AI jobs

With PFC for link-level losslessness, ECN-based congestion control (typically DCQCN), DCB tuning, and NIC offloads, RoCEv2 fabrics can closely match IB on common training and inference patterns, close enough for TCO and supply-chain benefits to dominate.

- Faster port speeds on the Ethernet roadmap

Ethernet’s merchant-silicon roadmap is moving aggressively: 400G and 800G ports are already mainstream, and 1.6T per-port Ethernet is expected in 2025–2026. A 1.6 Tb/s port equates to ~200 GB/s, and because a switch carries many such ports, aggregate bandwidth per device scales into the multi-terabyte-per-second range. InfiniBand, by contrast, currently ships at 400G (NDR) with 800G (XDR) announced, but Ethernet’s larger ecosystem and optics supply chain mean that 1.6T Ethernet will likely reach broad commercial availability sooner. This roadmap advantage is one reason hyperscalers and GPU cloud providers are leaning into Open Ethernet fabrics for their largest AI clusters.
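The port-speed arithmetic is simple but easy to get wrong by a factor of eight (bits vs. bytes). A minimal sketch, treating the nominal line rate as usable bandwidth (it ignores FEC, encoding, and protocol overhead, so the numbers are upper bounds); the 64-port switch and the function names are illustrative assumptions, not a specific product or API.

```python
"""Convert nominal Ethernet port speeds to bytes per second (upper bounds)."""

BITS_PER_BYTE = 8


def port_gigabytes_per_s(port_gbps: float) -> float:
    """Nominal port line rate (Gb/s) expressed in GB/s."""
    return port_gbps / BITS_PER_BYTE


def switch_aggregate_tbps(port_gbps: float, ports: int) -> float:
    """Aggregate switching bandwidth in Tb/s for a switch with `ports` ports."""
    return port_gbps * ports / 1000


if __name__ == "__main__":
    for speed in (400, 800, 1600):
        print(f"{speed}G port ~ {port_gigabytes_per_s(speed):.0f} GB/s")
    # Hypothetical 64-port switch at 800G per port.
    agg = switch_aggregate_tbps(800, 64)
    print(f"64 x 800G = {agg:.1f} Tb/s ~ {agg / BITS_PER_BYTE:.1f} TB/s")
```

A hypothetical 64 x 800G switch works out to 51.2 Tb/s, or roughly 6.4 TB/s, which is where the "multi-terabyte-per-second" figure comes from.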

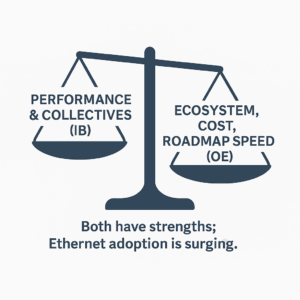

Where InfiniBand still shines

- Mature, vertically integrated stack for HPC/AI with tight coupling between NICs, switches, and software features (e.g., SHARP collectives, adaptive routing).

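- Deterministic performance and low tail latency out of the box: credit-based link-level flow control means a sender transmits only when the next hop has buffer space, so the fabric is lossless by design and needs far less tuning than Ethernet to stay lossless under brutal incast.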

- Proven at extreme scale in classic HPC as well as many AI superpods.

(These strengths are also why vendors position hybrid offerings—e.g., NVIDIA Spectrum-X Ethernet for AI alongside Quantum-X IB.)
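InfiniBand's low-tuning lossless behavior comes largely from credit-based link-level flow control: a sender transmits only when the downstream port has advertised free buffer credits, so excess traffic waits at the sender instead of being dropped. The toy model below contrasts that with a sender that transmits whenever it has data into the same finite buffer. It is a deliberately simplified sketch, assuming a single link, unit-sized packets, and instant credit return; `simulate` and its parameters are hypothetical. Ethernet reaches losslessness differently, with PFC pause frames, which is why PFC thresholds and headroom need careful tuning.

```python
"""Toy model: credit-based flow control vs. an uncontrolled sender."""


def simulate(ticks: int, offered: int, drain: int, buffer_slots: int,
             credit_based: bool) -> tuple[int, int, int]:
    """Return (delivered, dropped, waiting_at_sender) after `ticks` steps.

    offered: packets the sender wants to transmit per tick
    drain: packets the receiver forwards onward per tick
    buffer_slots: receiver buffer size in packets
    """
    queue = 0                  # packets held in the receiver buffer
    credits = buffer_slots     # free slots the receiver has advertised
    backlog = 0                # packets waiting at the sender
    delivered = dropped = 0

    for _ in range(ticks):
        backlog += offered
        # With credits, never send more than the receiver can hold.
        sent = min(backlog, credits) if credit_based else backlog
        backlog -= sent
        if credit_based:
            credits -= sent

        accepted = min(sent, buffer_slots - queue)
        dropped += sent - accepted   # overflow is lost
        queue += accepted

        forwarded = min(queue, drain)
        queue -= forwarded
        delivered += forwarded
        if credit_based:
            credits += forwarded     # freed slots are returned as credits

    return delivered, dropped, backlog


if __name__ == "__main__":
    # Sender offers 3 packets/tick into a link that drains 2/tick.
    print("credit-based:", simulate(100, 3, 2, 8, credit_based=True))
    print("uncontrolled:", simulate(100, 3, 2, 8, credit_based=False))
```

Both runs deliver 200 packets, but the credit-based link turns the excess into sender-side backpressure (94 packets still waiting, none dropped), while the uncontrolled link drops 94 packets; in a real fabric those drops trigger retransmissions that inflate tail latency.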

InfiniBand vs. Open Ethernet for AI Fabrics

| Feature / Factor | InfiniBand (IB) | Open Ethernet (RoCEv2 → UEC) |
|---|---|---|
| Current Port Speeds | 400G (NDR) shipping; 800G (XDR) announced | 400G / 800G mainstream now |
| Next-Gen Roadmap | 800G (XDR) rollout in progress | 1.6T per port expected 2025–2026 |
| Latency | Ultra-low, consistent tail latency with less tuning | Low latency achievable, but depends on precise tuning (PFC, ECN, buffers) |
| Collective Offloads | Mature (e.g., SHARP in-network reduction) | Emerging; UEC roadmap aims to deliver equivalent or better |
| Scalability | Proven at extreme HPC/AI scales | Hyperscale deployments already running RoCEv2 at 10k+ GPUs |
| Ecosystem | Vendor-concentrated (primarily NVIDIA) | Multi-vendor silicon and systems (Broadcom, Marvell, Cisco, Dell) plus open software and standards (SONiC, UEC) |
| Operational Model | Separate stack & tools, HPC-style | Familiar DC operations: SONiC, EVPN, standard telemetry |
| Supply Chain | Single-vendor optics/switch/NIC dependency | Broad optics/switch/NIC ecosystem, faster availability |
| Cost / TCO | Higher $/Gb; risk of supply bottlenecks | Lower $/Gb via global Ethernet economies of scale |
| Adoption Trend | Still strong in HPC & select AI superpods | Rapid adoption by hyperscalers & GPU clouds |
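The "Collective Offloads" row is easiest to see in bytes. In a classic ring all-reduce, each of N ranks sends about 2(N-1)/N times the message size over 2(N-1) sequential steps; with idealized in-network reduction (the idea behind SHARP), each rank sends its buffer once and the switches perform the summation. The sketch below compares the two under that idealization; it ignores pipelining, chunking, and switch-tree depth, and the function names are hypothetical.

```python
"""Per-rank send volume: ring all-reduce vs. idealized in-network reduction."""


def ring_allreduce_bytes_sent(message_bytes: float, ranks: int) -> float:
    """Reduce-scatter plus all-gather: 2(N-1) steps of message/N bytes each."""
    return 2 * (ranks - 1) / ranks * message_bytes


def ring_allreduce_steps(ranks: int) -> int:
    """Number of sequential communication steps in a ring all-reduce."""
    return 2 * (ranks - 1)


def in_network_bytes_sent(message_bytes: float) -> float:
    """Idealized switch-side reduction: each rank sends its buffer once."""
    return float(message_bytes)


if __name__ == "__main__":
    size = 1 << 30  # 1 GiB gradient buffer (illustrative)
    for n in (8, 64, 1024):
        ratio = ring_allreduce_bytes_sent(size, n) / in_network_bytes_sent(size)
        print(f"N={n:>4}: ring sends {ratio:.3f}x the in-network volume "
              f"over {ring_allreduce_steps(n)} steps")
```

As N grows, the ring's per-rank volume approaches twice the message size and its step count grows linearly, which is why moving the reduction into the fabric matters most at large scale.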

The real-world challenges you’ll face

On Open Ethernet (RoCEv2 today, UEC tomorrow)

- Losslessness is earned, not assumed

You must get PFC, ECN, QoS/priority groups, and buffer profiles exactly right. Misconfigurations cause pause storms or drops that balloon tail latency and kill training throughput. Modern SONiC distributions simplify this, but design discipline matters.

- Congestion & incast management

Large all-to-all and many-to-one patterns (e.g., MoE expert routing, parameter-server bursts) require tight congestion control and scheduling. Expect to validate with traffic generators and synthetic AI jobs before cutover.

- Observability & troubleshooting

You’ll need line-rate telemetry (In-band Network Telemetry, INT, and its variants), per-flow counters, and GPU-level job tracing to correlate network microbursts with step-time variance.

- Evolving standards

UEC 1.0 is here, but multi-vendor UET implementations and interop matrices will mature through 2025–2026; plan for staged adoption (start with RoCEv2; validate UET on pilot fabrics).
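Two of the knobs above can be sketched numerically. ECN marking on RoCEv2 fabrics is commonly configured as a RED/WRED-style curve (marking starts at a minimum queue threshold and ramps toward a maximum probability), and senders react with a DCQCN-style multiplicative rate cut whenever they receive a congestion notification packet (CNP). The incast helper estimates how quickly a many-to-one burst fills a port's buffer share. This is a simplified sketch: the thresholds, the g = 1/256 gain, the 4 MB buffer share, and all function and class names are illustrative assumptions, and DCQCN's rate-recovery phases are omitted.

```python
"""ECN marking, DCQCN-style rate cuts, and incast fill time (simplified)."""


def ecn_mark_probability(queue_kb: float, kmin_kb: float, kmax_kb: float,
                         pmax: float) -> float:
    """RED/WRED-style marking curve at a switch egress queue."""
    if queue_kb <= kmin_kb:
        return 0.0                      # below Kmin: never mark
    if queue_kb >= kmax_kb:
        return 1.0                      # at or above Kmax: mark every packet
    return pmax * (queue_kb - kmin_kb) / (kmax_kb - kmin_kb)


class DcqcnLikeSender:
    """Reaction point with DCQCN-style multiplicative decrease only.

    Fast recovery and additive/hyper increase are intentionally omitted.
    """

    def __init__(self, line_rate_gbps: float, g: float = 1 / 256):
        self.rate_gbps = line_rate_gbps
        self.alpha = 1.0   # congestion estimate in [0, 1]
        self.g = g         # EWMA gain for alpha (assumed value)

    def on_cnp(self) -> None:
        """Cut rate by alpha/2, then move alpha toward 1."""
        self.rate_gbps *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g

    def on_quiet_period(self) -> None:
        """No CNP during an alpha-update period: decay alpha toward 0."""
        self.alpha *= 1 - self.g


def incast_fill_time_us(senders: int, port_gbps: float,
                        buffer_mb: float) -> float:
    """Microseconds until `senders` line-rate flows overflow one egress port.

    The egress drains at port rate, so the queue grows at (senders - 1) x rate.
    """
    if senders <= 1:
        return float("inf")             # no excess traffic, queue never grows
    excess_bits_per_s = (senders - 1) * port_gbps * 1e9
    buffer_bits = buffer_mb * 1e6 * 8
    return buffer_bits / excess_bits_per_s * 1e6


if __name__ == "__main__":
    for q in (50, 250, 450):
        print(f"queue {q} KB -> mark p={ecn_mark_probability(q, 100, 400, 0.2):.2f}")
    s = DcqcnLikeSender(400)
    for _ in range(3):
        s.on_cnp()
        print(f"after CNP: {s.rate_gbps:.1f} Gb/s")
    print(f"16-to-1 incast at 400G fills 4 MB in "
          f"{incast_fill_time_us(16, 400, 4):.2f} us")
```

The incast figure (a few microseconds under these assumptions) shows why a common guideline is to start ECN marking well below the PFC XOFF threshold: senders need to slow down before pause frames fire, not after.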

On InfiniBand

- Vendor concentration & supply constraints

Excellent tech, but fewer vendor options can translate into lead-time risk and higher costs at scale.

- Operational silo

IB typically runs as a separate island with its own tools and skills. For orgs standardized on Ethernet and SONiC, that can slow Day-2 operations and incident response.

- Interoperability & convergence

Integrating IB with Ethernet-native storage networks, service fabrics, or shared tooling adds complexity—one reason some operators pick Ethernet for large, converged AI pods even if IB edges it in a micro-benchmark.

Design guidance (pragmatic, not dogmatic)

- If you need the fastest path to a stable fabric with minimal tuning overhead and you accept a vertically integrated stack, InfiniBand is a solid choice for AI training islands.

- If you value ecosystem choice, DC skill reuse, and long-term TCO, and you’re willing to engineer lossless behavior, Open Ethernet (RoCEv2 now, UEC next) is compelling—and increasingly the default for hyperscale and GPU clouds.

- Many large operators run both: Ethernet for broad AI pods and general DC convergence; IB where they want the most mature collectives/offloads today. Evaluate job completion time (JCT) and tail latency at your scale; don’t rely on single-number throughput.
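To act on the last point, compare fabrics on the distribution of step times rather than a single average. A minimal sketch, assuming you already collect per-step wall-clock times (in ms) from the training job; JCT is approximated as the sum of step times (ignoring startup and checkpointing), and the two step-time lists are made-up illustrations, not measurements.

```python
"""Compare fabrics on job completion time and tail step time."""
import math
import statistics


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def summarize(step_times_ms: list[float]) -> dict[str, float]:
    """JCT approximation plus center and tail of the step-time distribution."""
    return {
        "jct_s": sum(step_times_ms) / 1000,  # ignores startup/checkpoints
        "mean_ms": statistics.fmean(step_times_ms),
        "p50_ms": percentile(step_times_ms, 50),
        "p99_ms": percentile(step_times_ms, 99),
    }


if __name__ == "__main__":
    # Hypothetical step times for 100 training steps on two fabrics.
    fabric_a = [100.0] * 100
    fabric_b = [98.0] * 95 + [300.0] * 5
    for name, times in (("A", fabric_a), ("B", fabric_b)):
        s = summarize(times)
        print(f"fabric {name}: JCT {s['jct_s']:.2f} s, "
              f"p50 {s['p50_ms']:.0f} ms, p99 {s['p99_ms']:.0f} ms")
```

In this made-up example fabric B has the lower median step time, yet its slowest 5% of steps make the job finish later (10.81 s vs. 10.00 s); a median or single-number throughput comparison would pick the wrong fabric.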

Conclusion

InfiniBand remains a superb, battle-tested choice with tight integration and minimal tuning. But the center of gravity is shifting: Open Ethernet is winning more AI deployments thanks to ecosystem breadth, operational familiarity, cost/availability, faster port speeds, and closing performance gaps—backed by the industry’s full-court press via the Ultra Ethernet Consortium. If you invest in the right designs and automation, Ethernet gives you performance that’s “good enough” for most AI jobs today and a broader, more flexible runway tomorrow.