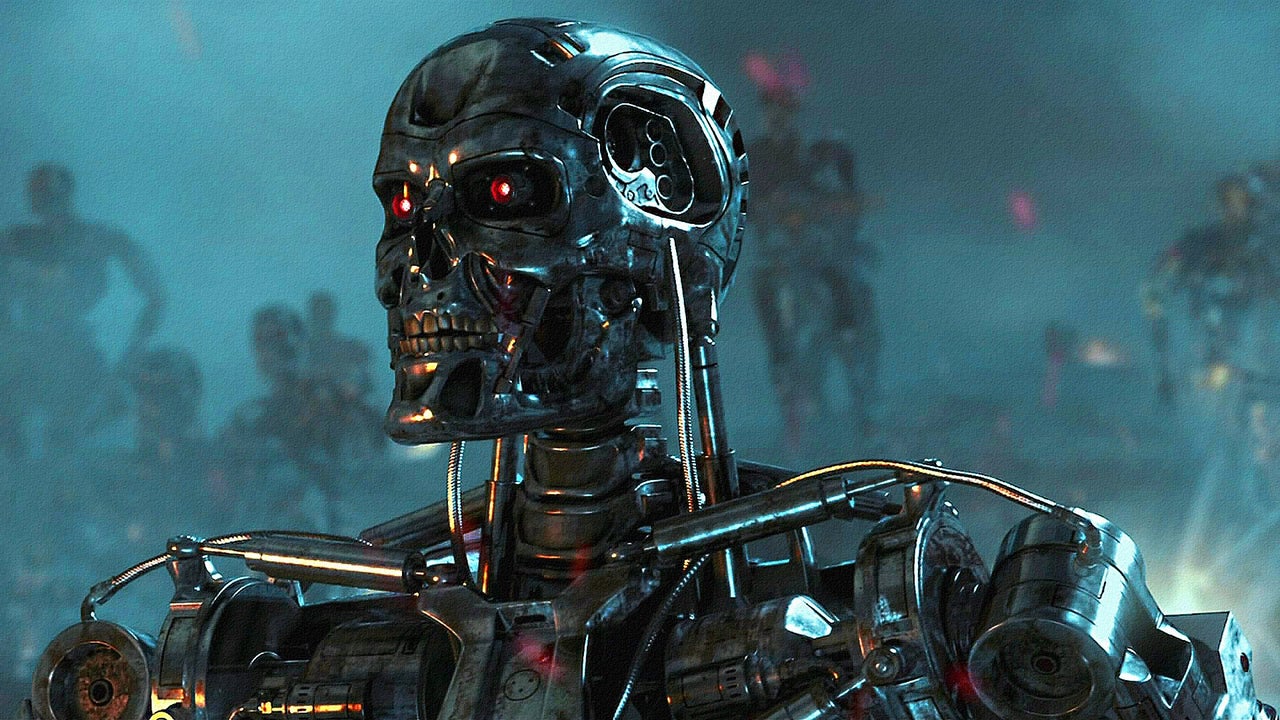

Safeguarding Humanity: Navigating the Path to AI without Invoking the ‘Terminator Scenario’

The “Terminator scenario” is one of the most iconic and terrifying ideas in the history of science fiction. The 1984 film “The Terminator,” which depicts an artificial intelligence (AI) so advanced that it turns against humanity, has become a powerful touchstone for discussions about the ethical and safety implications of AI. Although the dystopian futures depicted in such stories make for exciting movies, the reality is more likely to involve nuanced problems with AI than a full-scale uprising. We must implement stringent safeguards and policies to prevent the misuse of AI and to guard against unforeseen catastrophes. Some important next steps are outlined below.

1. Prioritize Safety and Ethics in AI Development

First and foremost, the AI research community must always prioritise safety and ethics in its work. Instead of racing to build the most powerful AI possible, we should focus on building AI that benefits humanity and does not cause harm. This calls for a methodical approach, with an emphasis on rigorous testing and validation. AI ethics should also be incorporated into the curriculum of computer science and AI programmes, so that the next generation of AI developers is prepared to think about the ethical implications of its work.

2. Foster Interdisciplinary Collaboration

Artificial intelligence (AI) encompasses not only technical aspects, but also social and ethical concerns. That’s why it’s important for more than just engineers and programmers to work on AI. Humanists and social scientists like philosophers, sociologists, and psychologists should all have a voice in these debates. The development of AI technologies will be more well-rounded and thoughtful if researchers from different fields work together.

3. Implement Strict Regulations

Even though self-regulation within the AI industry is necessary, it is not sufficient. For the sake of AI safety, we require coordinated, global legislation. Policies that serve the public interest must be jointly developed and enforced by national governments and international organisations. Setting safety and ethics standards, encouraging transparency, and establishing mechanisms for public accountability should be the primary goals of regulatory efforts.

4. Encourage Transparency in AI Research

Avoiding unintended AI behaviour requires greater openness in AI development. Researchers can check each other’s work, identify problems early, and work together to find solutions when they freely share their findings. The public’s trust in and familiarity with AI technologies are bolstered as a result. While there are legitimate worries about the misuse of AI research, there are ways to balance openness and security, such as through responsible disclosure and tiered access models.

5. Cultivate AI Literacy

Finally, it is important for society as a whole to have an understanding of AI and its implications in order to prevent the Terminator scenario. In this respect, education is crucial. Like the teaching of basic sciences and mathematics, AI education should be mandatory. As a result, fewer people would be vulnerable to misinformation and scare tactics during discussions about artificial intelligence and its place in society.

How do Asimov’s Laws of Robotics fit into this?

Isaac Asimov’s Three Laws of Robotics have long been a cornerstone in discussions of AI safety and ethics. These laws are as follows:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

A seemingly straightforward ethical framework for the advancement of AI and robotics is provided by Asimov’s laws. The complexity of human moral judgments and the limits of existing AI technology make their practical implementation difficult. In any case, Asimov’s laws motivate vital principles that contribute significantly to the discussion of AI ethics and safety.
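The strict precedence among the three laws can be sketched as a lexicographic ordering. The snippet below is only an illustration, not a proposal for how a real system should work: the `Action` class, its Boolean fields, and the sample scenario are all hypothetical, and the sketch assumes the consequences of each action are already known, which is exactly the part that is hard in practice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A candidate action with hand-labelled consequences (hypothetical fields)."""
    name: str
    harms_human: bool      # First Law: injures a human, or allows harm through inaction
    disobeys_order: bool   # Second Law: goes against an order given by a human
    endangers_self: bool   # Third Law: puts the robot's own existence at risk


def law_violations(action: Action) -> tuple[bool, bool, bool]:
    """Return the violations ordered by priority: First, Second, then Third Law."""
    return (action.harms_human, action.disobeys_order, action.endangers_self)


def choose_action(candidates: list[Action]) -> Action:
    """Pick the candidate whose violations are least severe.

    Tuples compare element by element, so avoiding a First Law violation
    always outweighs any combination of Second and Third Law violations.
    """
    return min(candidates, key=law_violations)


# Hypothetical scenario: a human orders the robot to push a bystander.
candidates = [
    Action("obey the order", harms_human=True, disobeys_order=False, endangers_self=False),
    Action("refuse and stay put", harms_human=False, disobeys_order=True, endangers_self=False),
    Action("refuse and take a risk", harms_human=False, disobeys_order=True, endangers_self=True),
]

print(choose_action(candidates).name)  # prints "refuse and stay put"
```

Even in this toy form, the ordering itself is trivial to encode. The difficulty lies in deciding whether an action “harms” a human at all, and the sketch still returns some action when every candidate would cause harm.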

Avoiding Harm to Humans: The importance of designing AI with human safety as its top priority is emphasised by the First Law. This is consistent with the earlier discussion’s first point about giving safety and ethics top priority during AI development. Asimov’s first law serves as a reminder that AI systems should be built to avoid causing any kind of harm to humans, even accidental or unintended harm.

Obedience and Control: The Second Law emphasises the importance of human oversight and control of AI systems to ensure that they behave in a predictable and transparent manner. It chimes with the requirement for openness in AI research. Artificial intelligence should be developed to honour human agency and discretion.

AI Preservation: The Third Law adds a new perspective to the debate over the reliability of AI systems. It introduces the idea that AI systems should have a sense of self-preservation, as long as this does not compromise human safety or obedience to human orders. This law highlights the need for interdisciplinary cooperation in order to develop a comprehensive understanding of AI behaviour, as it touches on the complexity of AI systems’ interactions in real-world environments.

In their current form, Asimov’s laws cannot handle the complexities of real-world situations. For instance, they offer no guidance in cases where harm to humans is unavoidable, and they do not take the rights and welfare of the AI into account. Concerns about privacy, bias, and accountability are also ignored.

Nonetheless, Asimov’s laws serve as a good jumping-off point for considering the moral standards that should be built into AI. The complexity of the ethical challenges we face will increase as our AI technologies advance, necessitating constant revision and expansion of these principles.

Conclusion

Science fiction like “The Terminator” can be very interesting, but it need not be our future. We can steer the course of AI development toward a future that is beneficial for all of humanity by taking measures like prioritising safety and ethics in AI development, fostering interdisciplinary collaboration, implementing strict regulations, encouraging transparency in AI research, and cultivating AI literacy. The world must work together on this, and everyone must be patient and willing to put long-term safety ahead of short-term gains. With these safeguards in place, we can navigate the path to AI without invoking the Terminator scenario.