LLMs vs SLMs: Why Smaller Models Could Power the Future of Agentic AI

The AI landscape has been dominated by Large Language Models (LLMs): massive neural networks trained on trillions of tokens, with hundreds of billions of parameters or more. These models, such as GPT-4 or Claude, have shown remarkable general-purpose intelligence, but they come with steep costs: enormous compute requirements, GPU dependency, and operational overhead that put them out of reach for many organizations.

Enter the Small Language Model (SLM).

Though modest in size, SLMs are increasingly becoming the workhorses of Agentic AI: systems designed not just to generate text, but to reason, plan, and act in complex environments.

LLMs vs SLMs: What’s the Difference?

| Feature | LLMs (Large Language Models) | SLMs (Small Language Models) |
|---|---|---|
| Parameter Count | 70B – 1T+ | 1B – 20B |
| Training Data | Trillions of tokens, broad coverage | Often curated, domain-specific |
| Hardware Needs | Multi-GPU clusters, often H200/B200-class GPUs | CPUs, small clusters, lower-power GPUs, even laptops |
| Inference Profile | High latency, high power draw | Low latency, energy-efficient |
| Strengths | General knowledge, broad reasoning | Task-specific efficiency, greater agility |
| Best Use Case | Foundation models, broad chatbots | Agentic AI, specialized applications, edge computing |
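Much of the gap in the "Hardware Needs" row follows from simple arithmetic: the memory needed just to hold a model's weights is roughly its parameter count times the bytes stored per parameter. The sketch below assumes weights dominate memory use and ignores the KV cache, activations, and runtime overhead; the precision table is illustrative rather than exhaustive.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumption: weights dominate; KV cache, activations and runtime overhead are ignored.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,  # bf16 uses the same two bytes per parameter
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in [("7B SLM", 7e9), ("20B SLM", 20e9), ("70B LLM", 70e9)]:
        for precision in ("fp16", "int4"):
            print(f"{name:8s} {precision}: {weight_memory_gb(params, precision):6.1f} GB")
```

Under these assumptions, a 7B model quantized to 4 bits needs about 3.5 GB for its weights, within reach of a laptop, whereas a 70B model at fp16 needs about 140 GB, more than a single 80 GB accelerator can hold.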

Why Agentic AI Loves SLMs

Agentic AI isn’t about throwing more data at a problem. Instead, it’s about orchestrating reasoning steps, chaining tasks, and interacting with tools. Here’s why SLMs fit that approach so well:

- Efficiency in Reasoning

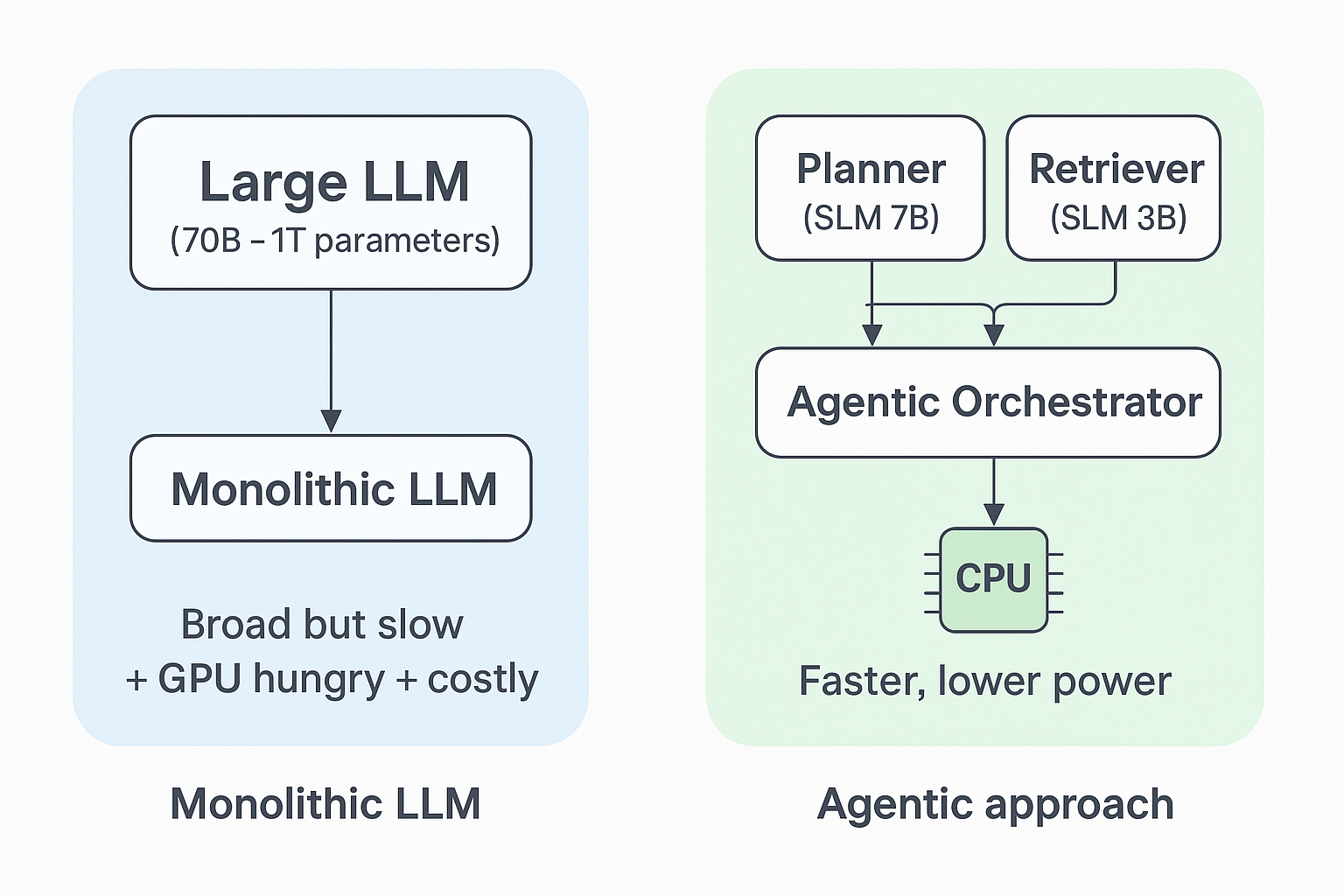

Smaller models can be fine-tuned to execute highly structured reasoning loops. Instead of “thinking” across a massive latent space, they can focus tightly on a given domain, often outperforming larger peers on those tasks. - Composable Intelligence

Instead of a monolithic LLM trying to do everything, Agentic AI can deploy multiple SLMs—each fine-tuned for a role (planning, retrieval, coding, domain expertise). The result is a swarm-like intelligence that’s greater than the sum of its parts. - Faster Iteration, Lower Cost

With fewer parameters, SLMs are cheaper to fine-tune, retrain, and deploy. Organizations can update them frequently to reflect changing knowledge or regulations without retraining a trillion-parameter model. - Alignment & Safety

SLMs can be trained with tighter alignment to specific policies, compliance frameworks, or organizational needs—reducing the risk of “hallucination” that’s common in generalist LLMs.
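The composable pattern can be sketched as a small router: a planner model breaks a task into steps, each step is dispatched to the model fine-tuned for its role, and each output is passed along as context for the next step. This is a minimal illustration under stated assumptions, not a reference design; `planner_slm`, `retrieval_slm`, `domain_slm`, and the `SPECIALISTS` registry are hypothetical stand-ins for separately deployed small models, written as plain functions so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    role: str         # which specialist should handle this step
    instruction: str  # what that specialist is asked to do


# Hypothetical stand-ins for fine-tuned SLMs. A real deployment would call a
# separately served small model here; plain functions keep the sketch runnable.
def planner_slm(task: str) -> List[Step]:
    # Assumption: the planner always decomposes a task into retrieve-then-answer.
    return [
        Step("retrieval", f"find sources on {task}"),
        Step("domain", f"answer using sources on {task}"),
    ]


def retrieval_slm(instruction: str, context: str) -> str:
    return f"sources({instruction})"


def domain_slm(instruction: str, context: str) -> str:
    # Consumes the previous step's output as context (task chaining).
    return f"answer({instruction} | {context})"


# Registry: each role maps to the small model fine-tuned for it.
SPECIALISTS: Dict[str, Callable[[str, str], str]] = {
    "retrieval": retrieval_slm,
    "domain": domain_slm,
}


def run_agent(task: str) -> str:
    """Plan with one SLM, then route each step to its specialist in order."""
    context = ""
    for step in planner_slm(task):
        handler = SPECIALISTS.get(step.role)
        if handler is None:
            raise ValueError(f"no specialist registered for role {step.role!r}")
        context = handler(step.instruction, context)
    return context


if __name__ == "__main__":
    print(run_agent("drug interactions"))
```

Because each role is just an entry in the registry, one specialist can be retrained or swapped out without touching the others, which is where the faster-iteration benefit of small, separate models shows up in practice.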

When SLMs Outperform LLMs

It may sound counterintuitive, but in many contexts, SLMs beat their bigger siblings:

- Domain-Specific Tasks: A 7B-parameter SLM trained on medical literature can outperform a general-purpose 70B LLM on medical reasoning tasks.

- Latency-Sensitive Applications: In finance, defence, or manufacturing, milliseconds matter. SLMs deliver faster response times without GPU bottlenecks.

- On-Device or Edge AI: Running inference locally reduces latency, keeps sensitive data on the device (easing privacy concerns), and removes the need for continuous cloud connectivity.

The takeaway: Bigger isn’t always better. Precision, agility, and efficiency often matter more than raw scale.

CPUs vs GPUs: Why SLMs are Perfect for Lean AI Infrastructure

LLMs have driven the GPU arms race, but that trajectory isn’t sustainable. GPUs consume massive amounts of energy, require specialized infrastructure, and are costly to operate at scale. SLMs, however, unlock a different path:

- CPU-Optimized Performance: Recent server CPUs, such as 4th Gen and later Intel Xeon Scalable processors with Advanced Matrix Extensions (AMX), can now handle inference for 7B–20B-parameter models efficiently, particularly when the models are quantized.

- Lower Power Footprint: Running SLMs on CPUs uses a fraction of the wattage of GPU clusters, aligning with green AI and sustainability goals.

- Simplified Infrastructure: Many enterprises already have CPU-based infrastructure in their data centers. Deploying SLMs here avoids costly new GPU investments.
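Why model size matters so much on CPUs can be seen from a common rule of thumb: when generating one token at a time, inference is often limited by memory bandwidth rather than arithmetic, because every weight has to be read from memory for each new token. Matrix extensions such as AMX speed up the arithmetic, but this bandwidth ceiling still scales inversely with model size. The sketch below computes that upper bound; the 300 GB/s bandwidth is a hypothetical figure chosen for illustration, not a measurement of any particular processor.

```python
# Rough upper bound on single-stream decode speed for a memory-bound model.
# Assumptions: each generated token reads every weight from memory exactly once;
# KV-cache traffic, compute limits, and cache reuse are ignored.


def max_decode_tokens_per_s(num_params: float, bytes_per_param: float,
                            mem_bandwidth_gb_s: float) -> float:
    """Return the bandwidth-limited ceiling on tokens generated per second."""
    weight_bytes = num_params * bytes_per_param
    return mem_bandwidth_gb_s * 1e9 / weight_bytes


if __name__ == "__main__":
    BANDWIDTH_GB_S = 300.0  # hypothetical memory bandwidth, for illustration only
    configs = [
        ("7B SLM, int4", 7e9, 0.5),
        ("20B SLM, int8", 20e9, 1.0),
        ("70B LLM, fp16", 70e9, 2.0),
    ]
    for name, params, bytes_per_param in configs:
        tps = max_decode_tokens_per_s(params, bytes_per_param, BANDWIDTH_GB_S)
        print(f"{name}: <= {tps:.1f} tokens/s")
```

With identical bandwidth, the 4-bit 7B model's ceiling is 40 times that of the fp16 70B model, simply because it reads 40 times fewer bytes per token; real throughput will sit below either bound.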

In short: SLMs enable Agentic AI on everyday infrastructure, making AI more accessible, sustainable, and cost-effective.

The Future: Agentic AI Powered by SLMs

As the industry shifts from “one giant model” thinking to modular, agent-based AI, SLMs will increasingly be the engines behind decision-making, planning, and domain-specific intelligence.

Rather than relying on a single massive LLM running on energy-hungry GPUs, enterprises can orchestrate networks of specialized SLMs running on CPUs. This unlocks:

- Scalable AI without GPU bottlenecks

- Lower total cost of ownership (TCO) for enterprises

- Sustainable AI aligned with power and carbon goals

The next wave of AI won’t just be about scale. It will be about smart scale—where SLMs take center stage as the ideal partner for Agentic AI. SLMs don’t replace LLMs, but they redefine where and how intelligence happens. By combining Agentic AI design with CPU-friendly small models, we can unlock a future where AI is faster, leaner, and more sustainable.