Eight Years After ChatGPT: Why Human Bias in AI Still Persists

When ChatGPT arrived, it redefined what people thought was possible with artificial intelligence. Conversational models that once seemed futuristic suddenly became part of everyday life. But nearly a decade on, a fundamental question remains unanswered: Can AI ever be free from human bias?

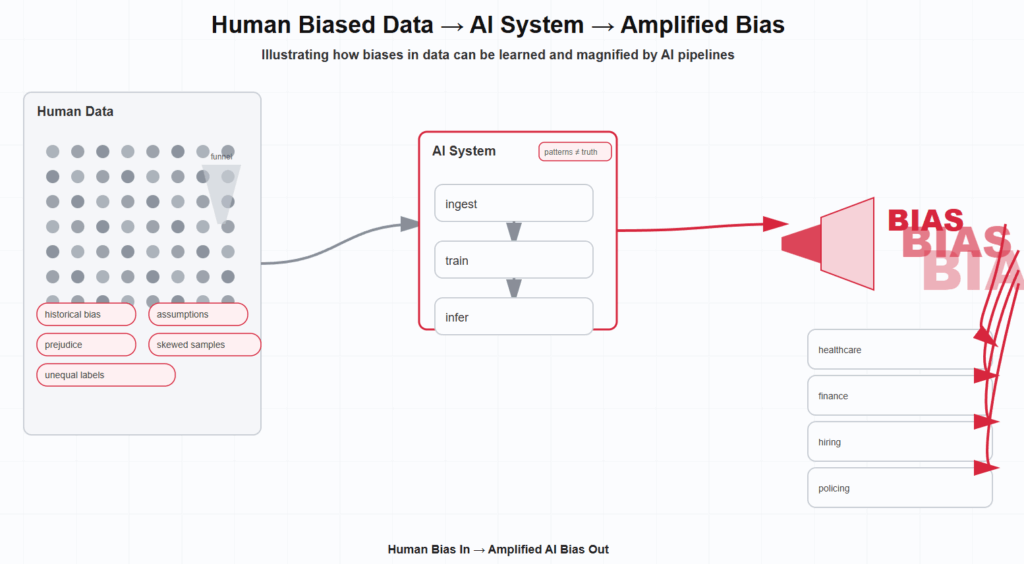

The reality, after eight years of iteration, scaling, and safety innovation, is that AI still reflects the biases of the humans who create, train, and deploy it. Bias hasn’t been eliminated—it has been amplified, shifted, and sometimes obscured. And its impact is especially stark in sensitive industries like healthcare, finance, recruitment, and justice.

This isn’t just an abstract concept for me. During my master’s dissertation research, I studied the diagnostic process for Autism Spectrum Disorder (ASD) and found strong evidence of human bias influencing diagnostic outcomes. The criteria themselves were well defined, yet clinicians often interpreted symptoms differently depending on their own perspectives. Gender, socioeconomic background, and cultural context all subtly shaped how a diagnosis was reached. Girls, for example, were often under-diagnosed because their presentation of ASD diverged from the male-oriented “typical” profile that shaped much of the diagnostic framework.

That experience taught me something important: bias is not just a by-product of technology—it starts with us. And when AI learns from our systems, it inevitably learns our flaws as well.

Why Bias Still Persists in AI Models

AI doesn’t invent prejudice on its own. Instead, it inherits the inequities baked into human systems. There are several reasons why bias remains difficult to eliminate:

- Data reflects inequality: Just as ASD diagnostic data reflects skewed clinical judgments, AI datasets mirror societal imbalances.

- Human labeling is subjective: Annotators bring their own cultural and personal perspectives, much like clinicians interpreting behaviors in ASD assessments.

- Optimization favors the majority: Outlier groups—often those already under-served—get marginalized in favor of “average” performance.

- Commercial incentives outweigh fairness: AI is often tuned for profitability or efficiency rather than equitable outcomes.
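The point about optimization favoring the majority can be made concrete with a small sketch. The data below is entirely hypothetical, and `accuracy_by_group` is an illustrative helper rather than part of any library. It shows how a headline accuracy figure can look respectable while a small group is served badly.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Return overall accuracy and a dict of per-group accuracy."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group

# Hypothetical toy data: 8 majority-group records, 2 minority-group records.
groups = ["majority"] * 8 + ["minority"] * 2
y_true = [1, 0, 1, 0, 1, 0, 1, 0, 1, 1]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0, 0, 0]

overall, per_group = accuracy_by_group(y_true, y_pred, groups)
print(overall)    # 0.8
print(per_group)  # {'majority': 1.0, 'minority': 0.0}
```

An aggregate score of 80% hides the fact that every minority-group prediction in this toy example is wrong, which is why breaking metrics down by group matters.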

Healthcare: When Bias Becomes a Health Risk

Healthcare is one of the clearest areas where bias has real, and sometimes severe, consequences.

- Dermatology models underperform on darker skin tones because of under-representation in training data.

- Predictive analytics tools underestimate care needs for Black patients when cost of care is used as a proxy for health need.

- Genomics datasets skew heavily toward European ancestry, reducing reliability for other groups.

- ASD diagnosis demonstrates how bias is embedded even before AI arrives. My own research showed that human interpretation of diagnostic criteria varies widely, and AI trained on these biased records risks reinforcing existing disparities rather than correcting them.
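The cost-as-proxy problem mentioned above can be illustrated with a toy ranking. All values here are invented for illustration, and the assumption that group B incurs lower costs at the same level of need is built into the data on purpose. It is a sketch of the mechanism, not a model of any real system.

```python
# Hypothetical patients: (patient_id, group, chronic_conditions, annual_cost).
# Assumption: group B has lower recorded costs for the same level of need.
patients = [
    ("p1", "A", 4, 9000),
    ("p2", "A", 3, 7000),
    ("p3", "A", 2, 5000),
    ("p4", "B", 4, 4500),
    ("p5", "B", 3, 3500),
    ("p6", "B", 2, 2500),
]

def top_k(records, key_index, k):
    """Return the ids of the k records with the highest value at key_index."""
    ranked = sorted(records, key=lambda r: r[key_index], reverse=True)
    return [r[0] for r in ranked[:k]]

# Enroll two patients in an extra-care program, ranking by each signal.
by_cost = top_k(patients, key_index=3, k=2)
by_need = top_k(patients, key_index=2, k=2)

print(by_cost)  # ['p1', 'p2']
print(by_need)  # ['p1', 'p4']
```

Ranking by cost selects only group A patients, while ranking by a direct measure of need also selects the sickest group B patient. The choice of label, not the learning algorithm, drives the disparity.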

Finance: Bias and Unequal Opportunity

The financial sector increasingly uses AI for credit scoring, loan approvals, and fraud detection. But models often:

- Deny loans disproportionately to certain demographics due to historical redlining embedded in data.

- Flag legitimate transactions more often for minority customers.

- Offer wealth management advice that steers already under-served customers toward overly conservative strategies, widening wealth gaps rather than closing them.

Instead of democratizing access to financial services, AI risks perpetuating long-standing inequities.

Recruitment: Automating Bias Into Hiring

AI promised to remove prejudice from recruitment, but in practice it often scales it.

- Resumes from women or from candidates with nontraditional backgrounds are penalized because past “successful hires” skew male and toward graduates of elite universities.

- Language-analysis tools score candidates based on tone or word choice, which can vary by culture rather than competence.

- Video-interview AI systems can misinterpret cultural norms in body language or communication style.

Rather than opening doors, recruitment AI can close them even faster.
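One simple check an auditor might run on a screening tool is to compare selection rates across groups. The sketch below uses made-up decisions and hypothetical helper names. The 0.8 cutoff reflects the "four-fifths" rule of thumb from US employment-selection guidance and is a screening heuristic, not a definitive test of discrimination.

```python
def selection_rates(decisions):
    """decisions: list of (group, selected) pairs. Returns rate per group."""
    selected, total = {}, {}
    for group, was_selected in decisions:
        total[group] = total.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(was_selected)
    return {g: selected[g] / total[g] for g in total}

def impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())

# Hypothetical screening outcomes: 10 applicants per group.
decisions = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)

rates = selection_rates(decisions)
ratio = impact_ratio(rates)
print(rates)            # {'men': 0.6, 'women': 0.3}
print(round(ratio, 2))  # 0.5
print(ratio < 0.8)      # True: below the four-fifths heuristic
```

A low ratio does not prove the tool is unfair on its own, but it is a signal that the outcomes deserve a closer look.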

Law Enforcement and Justice: Bias With High Stakes

AI in policing and justice shows how dangerous bias can be.

- Facial recognition systems have higher error rates for women and people of color, leading to wrongful arrests.

- Predictive policing tools often reinforce existing patterns of over-policing in specific communities.

- Risk assessment algorithms disproportionately label minority defendants as high risk, including many who do not go on to reoffend.

These examples show how bias in AI doesn’t just cause inconvenience—it can destroy lives.
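Disparities like these are usually measured by comparing error rates per group rather than overall accuracy. The following sketch computes a false positive rate, meaning the share of people who did not reoffend but were still flagged as high risk. The records and group names are hypothetical.

```python
def false_positive_rate(records):
    """records: list of (flagged_high_risk, reoffended) boolean pairs.

    Returns the share of non-reoffenders who were flagged as high risk,
    or None if the group contains no non-reoffenders.
    """
    flags_for_negatives = [flagged for flagged, reoffended in records
                           if not reoffended]
    if not flags_for_negatives:
        return None
    return sum(flags_for_negatives) / len(flags_for_negatives)

# Hypothetical outcomes for two groups of defendants.
group_a = [(True, False), (True, False), (False, False), (False, False),
           (True, True)]
group_b = [(True, False), (False, False), (False, False), (False, False),
           (True, True)]

fpr_a = false_positive_rate(group_a)
fpr_b = false_positive_rate(group_b)
print(fpr_a)  # 0.5
print(fpr_b)  # 0.25
```

In this toy data, a person in group A who does not reoffend is twice as likely to be wrongly flagged as a comparable person in group B.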

Why Removing Bias is So Hard

Bias is not simply a technical flaw; it is cultural and human. My work on ASD diagnosis highlighted how much variation comes from human subjectivity. AI reflects the same challenges.

- There is no such thing as a truly “neutral” dataset.

- Trade-offs exist between optimizing for accuracy and ensuring fairness.

- Even definitions of fairness change across time, culture, and context.

In other words, AI doesn’t create bias—it amplifies what already exists.
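The trade-off between accuracy and fairness can be seen in a small threshold experiment. The scores and labels below are hypothetical, and the fairness measure used here (the gap in selection rates, often called demographic parity) is only one of several competing definitions. Treat this as a sketch of the tension, not a recommendation for any particular criterion.

```python
# Hypothetical records: (group, model_score, true_label).
records = [
    ("A", 0.9, 1), ("A", 0.8, 1), ("A", 0.7, 1), ("A", 0.4, 0),
    ("B", 0.6, 1), ("B", 0.5, 0), ("B", 0.3, 0), ("B", 0.2, 0),
]

def evaluate(records, thresholds):
    """Return (accuracy, selection-rate gap) given a threshold per group."""
    correct = 0
    selected, counts = {}, {}
    for group, score, label in records:
        pred = int(score >= thresholds[group])
        correct += int(pred == label)
        selected[group] = selected.get(group, 0) + pred
        counts[group] = counts.get(group, 0) + 1
    rates = [selected[g] / counts[g] for g in counts]
    return correct / len(records), max(rates) - min(rates)

# One shared threshold: best accuracy, but unequal selection rates.
shared = evaluate(records, {"A": 0.55, "B": 0.55})
# Group-specific thresholds: equal selection rates, lower accuracy.
adjusted = evaluate(records, {"A": 0.75, "B": 0.45})

print(shared)    # (1.0, 0.5)
print(adjusted)  # (0.75, 0.0)
```

Closing the selection-rate gap in this example costs a quarter of the accuracy, and a different fairness definition would give a different answer about which setting is preferable.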

Paths Forward: Mitigating Bias, Not Eliminating It

While eliminating bias entirely may be impossible, there are practical steps that can reduce harm:

- Broader, more representative training data.

- Independent bias audits.

- Transparency in how AI models are trained and evaluated.

- Involvement of ethicists, domain experts, and affected communities.

- New metrics that measure fairness alongside accuracy.

Eight Years On: Human Bias, Human Responsibility

Eight years since ChatGPT’s debut, AI systems are influencing healthcare, finance, recruitment, and justice. The persistence of bias shows us that this is not just a technical issue but a societal one.

The lesson from my own research into ASD diagnosis is clear: human subjectivity and bias are deeply ingrained in the systems AI learns from. When we expect AI to be “neutral,” we often forget that it is trained on data shaped by people, with all our flaws intact.

If AI is a mirror of humanity, then the responsibility is ours—not just to refine the technology, but to reflect on ourselves. Eliminating bias may never be possible, but mitigating it is both possible and necessary.