NVIDIA RTX PRO 6000 Blackwell: The Swiss-Army GPU for Enterprise AI

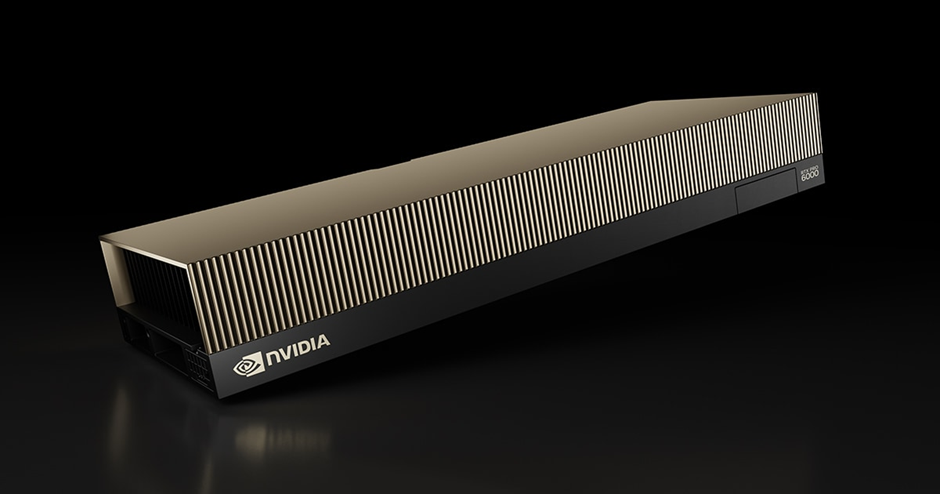

Not to be confused with RTX 6000 Ada (48 GB) or the older RTX A6000 (Ampere), the RTX PRO 6000 Blackwell is NVIDIA’s 96 GB, fifth-gen Tensor Core workhorse designed for both workstations and data-center “RTX PRO Servers.” It’s a very different animal.

Why this card matters

Blackwell introduces 5th-gen Tensor Cores with FP4 (4-bit) support for ultra-efficient LLM inference and high-throughput AI, plus big jumps in classic GPU compute and visualization. In practice, you get up to ~4,000 AI TOPS (effective FP4), 96 GB of GDDR7, and headroom to host larger models per GPU, or to carve the GPU into MIG slices for multi-tenant serving.

Specs at a glance (Workstation / Server Edition)

NVIDIA RTX PRO 6000 Blackwell – Specifications

| Feature | Workstation Edition | Server Edition |
|---|---|---|
| Architecture | Blackwell | Blackwell |
| CUDA Cores | 24,064 | 24,064 |
| Tensor Cores (5th Gen) | 752 | 752 |
| RT Cores | 188 | 188 |
| Memory | 96 GB GDDR7 | 96 GB GDDR7 |
| Memory Bandwidth | ~1.8 TB/s | ~1.6 TB/s |
| AI Performance | Up to ~4,000 TOPS (FP4) | Up to ~4,000 TOPS (FP4) |
| Interconnect | PCIe Gen5 x16 | PCIe Gen5 x16 |
| MIG (Multi-Instance GPU) | No | Yes |
| NVLink | No | No |
| Max Power | Up to 600 W (300 W Max-Q available) | Up to 600 W |
| Form Factor | Dual-slot workstation card | Server GPU (data-center qualified) |

What it’s best at: inference first, selective training second

Inference (its sweet spot)

- LLM serving at low precision (FP4/FP8): 5th-gen Tensor Cores + FP4 make the RTX PRO 6000 ideal for high-QPS text and vision-language inference, agentic AI, and RAG pipelines. The 96 GB pool comfortably holds big model weights at 4-bit while leaving room for KV cache—critical for long contexts and higher batch sizes.

- Multi-tenant serving: The Server Edition supports MIG, letting you split the GPU (e.g., 4×24 GB partitions) to isolate apps or teams on one card. That’s perfect for enterprise platforms running many models/microservices concurrently.

- Enterprise deployment density: NVIDIA’s new RTX PRO Servers let OEMs pack up to eight RTX PRO 6000 Blackwell GPUs per system, giving you serious inference throughput without stepping up to liquid-cooled B-series parts.
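
To make the "fits in 96 GB" claim concrete, here is a back-of-the-envelope sketch of weight memory at different precisions. It counts only raw weights (parameters × bits / 8) and ignores quantization scales, activations, and runtime overhead, so treat the numbers as rough lower bounds. The function names are illustrative, not part of any NVIDIA tool.

```python
VRAM_GB = 96.0  # capacity quoted for the RTX PRO 6000 Blackwell


def weight_gb(params_billion: float, bits_per_weight: int) -> float:
    """Raw weight footprint in GB: parameters * bits / 8 (no overhead)."""
    return params_billion * bits_per_weight / 8


def headroom_gb(params_billion: float, bits_per_weight: int,
                vram_gb: float = VRAM_GB) -> float:
    """VRAM left for KV cache and activations after loading the weights."""
    return vram_gb - weight_gb(params_billion, bits_per_weight)


if __name__ == "__main__":
    for bits in (16, 8, 4):
        w = weight_gb(70, bits)
        h = headroom_gb(70, bits)
        verdict = "fits" if h > 0 else "does not fit"
        print(f"70B @ {bits}-bit: {w:.0f} GB weights, "
              f"{h:+.0f} GB headroom ({verdict})")
```

Under these assumptions, a 70B model needs about 140 GB at 16-bit (too large for one card), 70 GB at 8-bit, and 35 GB at 4-bit, which is where the extra room for KV cache comes from.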

Training (where it shines—and where it doesn’t)

- Great for fine-tuning and LoRA on small/medium models, experimentation, and small-batch supervised runs—especially where cost, air-cooling, or rack constraints rule out H100/H200/B-series.

- Not designed for large, multi-GPU model-parallel pre-training. There’s no NVLink; inter-GPU traffic rides PCIe Gen5, which is fine for data-parallel or modest tensor-parallel jobs but much slower than NVLink for massive model sharding. If you’re chasing frontier-scale training, stay with NVLink-connected HGX platforms built on Hopper or Blackwell data-center GPUs (H100, H200, B200, B300). (See NVIDIA’s AI Enterprise support matrices and Blackwell docs.)
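
The PCIe-versus-NVLink gap can be sketched with simple arithmetic. The bandwidth constants below are assumptions, not figures from this article: about 64 GB/s per direction is the nominal peak for PCIe Gen5 x16, and about 450 GB/s per direction corresponds to the 900 GB/s bidirectional NVLink figure quoted for H100-class GPUs. Real throughput is lower on both, and the ring all-reduce model is idealized (no latency, no host or switch bottlenecks).

```python
PCIE_GEN5_X16_GBPS = 64.0  # assumed nominal per-direction peak, GB/s
NVLINK_GBPS = 450.0        # assumed nominal per-direction peak (H100-class), GB/s


def ring_allreduce_gb(payload_gb: float, n_gpus: int) -> float:
    """Data each GPU sends in an ideal ring all-reduce: 2*(n-1)/n * payload."""
    return 2 * (n_gpus - 1) / n_gpus * payload_gb


def transfer_ms(data_gb: float, link_gbps: float) -> float:
    """Time to push data_gb over a link at link_gbps, in milliseconds."""
    return data_gb / link_gbps * 1000


if __name__ == "__main__":
    sent = ring_allreduce_gb(payload_gb=1.0, n_gpus=8)
    for name, bw in (("PCIe Gen5 x16", PCIE_GEN5_X16_GBPS),
                     ("NVLink", NVLINK_GBPS)):
        print(f"{name}: {transfer_ms(sent, bw):.1f} ms per 1 GB all-reduce")
```

With these nominal numbers, a 1 GB all-reduce across eight GPUs takes roughly 27 ms over PCIe versus under 4 ms over NVLink. That is tolerable for occasional gradient syncs but painful when every layer of a sharded model communicates.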

Real-world deployment patterns

- Private LLM serving & agentic AI: Host 70B-class models at FP4/FP8 (roughly 35 GB or 70 GB of weights per model on a 96 GB card) with room for context and caches, then scale across 2–8 GPUs as traffic grows. MIG partitions help you mix models in one box.

- RAG & analytics: Pair with fast object/file storage and a vector DB; use the GPU for embeddings, rerankers, and response generation on the same node to minimize latency.

- Vision & multimodal: Big VRAM plus 5th-gen Tensor Cores accelerate diffusion and video-generation models, VLMs, and high-res imaging, often alongside 3D/Omniverse work.

- VDI/compute consolidation: Carve the GPU into MIG instances for secure isolation and predictable SLAs per team or workload.
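
For the LLM-serving pattern, the "room for context and caches" can be estimated with a short KV-cache calculation. The model shape used here (80 layers, 8 KV heads with grouped-query attention, head dimension 128, 16-bit cache entries) is an assumption modeled on Llama-style 70B architectures, and the 6 GB runtime reserve is a placeholder; substitute the values for your own model and serving stack.

```python
def kv_bytes_per_token(layers: int, kv_heads: int, head_dim: int,
                       bytes_per_elem: int) -> int:
    """KV-cache bytes for one token: key + value for every layer and KV head."""
    return 2 * layers * kv_heads * head_dim * bytes_per_elem


def max_cached_tokens(free_gb: float, per_token_bytes: int) -> int:
    """How many tokens of KV cache fit in free_gb of VRAM."""
    return int(free_gb * 1e9 // per_token_bytes)


if __name__ == "__main__":
    # Assumed Llama-style 70B shape with a 16-bit KV cache.
    per_token = kv_bytes_per_token(layers=80, kv_heads=8, head_dim=128,
                                   bytes_per_elem=2)
    # 96 GB VRAM - 35 GB of 4-bit weights - an assumed 6 GB runtime reserve.
    free_gb = 96 - 35 - 6
    tokens = max_cached_tokens(free_gb, per_token)
    print(f"{per_token / 1024:.0f} KiB per token, ~{tokens:,} cached tokens")
    print(f"~{tokens // 8192} concurrent 8K-token sequences")
```

Under these assumptions, each token costs 320 KiB of cache, so one card holds on the order of 20 full 8K-token sequences next to a 4-bit 70B model. A lower-precision KV cache or shorter contexts would raise that count.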

How Nutanix is using RTX PRO 6000 Blackwell in GPT-in-a-Box–style stacks

Nutanix Enterprise AI and the “GPT-in-a-Box” approach bundle infrastructure, orchestration, and NVIDIA AI Enterprise (including NIM microservices) so you can stand up private GenAI quickly on-prem or at the edge. In 2025, Nutanix announced its inclusion in NVIDIA’s Blackwell enterprise-validated design, aligning Nutanix Cloud Infrastructure (NCI), Nutanix Kubernetes Platform (NKP), and Nutanix Enterprise AI (NAI) 2.4 with the new RTX PRO/Blackwell stack. That means simpler, supported integration of RTX PRO 6000 Blackwell GPUs for agentic AI, RAG, and LLM inference at scale.

On the hardware side, NVIDIA’s RTX PRO Servers (from Cisco, Dell, HPE, Lenovo, Supermicro, etc.) support up to eight RTX PRO 6000 Blackwell GPUs per node, and are now emerging in 2U air-cooled designs as well as 4U—handy for dense, edge-friendly GPT-in-a-Box deployments.

Nutanix’s earlier GPT-in-a-Box 2.0 guidance focused on Ada/Hopper GPUs (L40S, H100), but the Blackwell-validated design extends that path to RTX PRO 6000—keeping the same operational model while upgrading efficiency and VRAM headroom. NIM microservices plus MIG make it straightforward to run multiple model endpoints (chat, vision, embeddings, etc.) on the same GPU fleet.

Sizing tips (rule-of-thumb)

- Single-GPU, low-latency inference: RTX PRO 6000 Blackwell (96 GB) at FP4 or FP8 handles large models with healthy context/batch without host paging. Great “one-box” latency.

- Multi-model consolidation: Use MIG to pin resources per service (e.g., 2×48 GB or 4×24 GB slices). This maps well to Nutanix Enterprise AI and NIM-style endpoint orchestration.

- Throughput scaling: Scale up to 2–8 GPUs per RTX PRO Server for higher QPS. For massive training or bandwidth-hungry model parallelism, move to NVLink/HGX platforms instead.
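
The multi-model consolidation tip can be turned into a small planning helper. This is a sketch only: it considers the uniform layouts mentioned above (4×24 GB, 2×48 GB) plus the undivided 96 GB GPU, gives each service its own slice, and does not talk to any NVIDIA tooling. The service names and memory needs are hypothetical.

```python
from typing import Dict, Optional

# (layout name, slice size in GB, number of slices), smallest slices first.
LAYOUTS = [("4x24", 24, 4), ("2x48", 48, 2), ("1x96", 96, 1)]


def pick_layout(service_gb: Dict[str, float]) -> Optional[str]:
    """Return the finest uniform layout that gives every service its own slice.

    Returns None when the services cannot share one GPU under these layouts.
    """
    for name, slice_gb, count in LAYOUTS:
        enough_slices = len(service_gb) <= count
        all_fit = all(need <= slice_gb for need in service_gb.values())
        if enough_slices and all_fit:
            return name
    return None


if __name__ == "__main__":
    # Hypothetical services and their estimated VRAM needs in GB.
    print(pick_layout({"chat": 40, "embeddings": 10}))
    print(pick_layout({"chat": 20, "rerank": 12, "embeddings": 10}))
    print(pick_layout({"chat-a": 50, "chat-b": 50}))
```

The first mix needs the 2×48 layout because the chat model exceeds a 24 GB slice, the second fits the 4×24 layout, and the third returns `None`, which is the signal to spread the services across more than one GPU.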

Conclusion

If your priority is enterprise-grade inference, agentic AI, and fast iteration on fine-tuning—with big VRAM, FP4 efficiency, air-cooling, and mainstream rackability—the RTX PRO 6000 Blackwell hits a rare balance of performance, capacity, and practicality. It’s a particularly good fit for Nutanix GPT-in-a-Box–style deployments, where you want supported, repeatable builds that scale from a single node to a small fleet without jumping to exotic cooling or HBM-class budgets.